Chapter 2: Image Classification (CNN)

CNN implementation using TensorFlow:

The model has two convolution layers, with 64 and 128 filters respectively. My laptop does not have enough RAM to run it, so only the code is posted here.
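The flatten size `5 * 5 * 128` used in the TensorFlow code, and the memory problem mentioned above, can both be checked with a little arithmetic. This is a pure-Python sketch with hypothetical helper names, not TensorFlow code. It assumes 'valid' padding, which is the default for `tf.layers.conv2d` and `tf.layers.max_pooling2d`, and 4-byte float32 values. The training loop evaluates the entire 10,000-image test set in a single `session.run` every 10 batches. One plausible cause of the RAM shortage is that this single call needs roughly 100 times the activation memory of a 100-image training batch. This is an estimate, not a measurement.

```python
# Hypothetical helpers: trace the layer output shapes of the TensorFlow model
# and roughly estimate the float32 memory its conv/pool outputs occupy.
# Assumes 'valid' padding (the tf.layers default) everywhere.

def conv_out(size, kernel=3, stride=1):
    """Spatial size after a convolution with 'valid' padding."""
    return (size - kernel) // stride + 1


def pool_out(size, pool=2, stride=2):
    """Spatial size after max pooling with 'valid' padding."""
    return (size - pool) // stride + 1


def trace_shapes(size=28):
    """(height, width, channels) after each conv/pool layer of the model."""
    shapes = []
    size = conv_out(size)
    shapes.append((size, size, 64))    # convolution_layer_1
    size = pool_out(size)
    shapes.append((size, size, 64))    # pooling_layer_1
    size = conv_out(size)
    shapes.append((size, size, 128))   # convolution_layer_2
    size = pool_out(size)
    shapes.append((size, size, 128))   # pooling_layer_2
    return shapes


def activation_bytes(shapes, batch, bytes_per_value=4):
    """Bytes to hold these layer outputs for `batch` images at once.
    Ignores weights, the dense layers and any framework overhead."""
    return sum(batch * h * w * c * bytes_per_value for h, w, c in shapes)


shapes = trace_shapes()
print(shapes)     # [(26, 26, 64), (13, 13, 64), (11, 11, 128), (5, 5, 128)]
h, w, c = shapes[-1]
print(h * w * c)  # 3200, i.e. 5 * 5 * 128
print(f"{activation_bytes(shapes, 100) / 1e6:.1f} MB per training batch of 100")
print(f"{activation_bytes(shapes, 10000) / 1e9:.2f} GB for all 10,000 test images")
```

With these assumptions the estimate is about 29.1 MB per training batch and about 2.91 GB for the full test set. Evaluating the test set in smaller chunks would reduce that peak.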

In addition, TensorBoard is used for visualization. The training and test summaries are written to /tmp/train and /tmp/test.
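`add_variable_summary` logs five things for each tensor: the mean, standard deviation, maximum, minimum and a histogram. The sketch below is a plain-Python illustration, not TensorFlow code, and the function name is hypothetical. It computes the four scalars on a flat list. The standard deviation is the population form, sqrt(mean((x - mean)^2)), which is the same expression the TensorFlow code builds.

```python
import math


def summary_stats(values):
    """Plain-Python version of the four scalars add_variable_summary logs.
    (The histogram summary is not reproduced here.)"""
    n = len(values)
    mean = sum(values) / n                                      # 'Mean'
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)   # 'StandardDeviation' (population)
    return {
        "Mean": mean,
        "StandardDeviation": std,
        "Maximum": max(values),   # 'Maximum'
        "Minimum": min(values),   # 'Minimum'
    }


stats = summary_stats([0.0, 1.0, 2.0, 3.0, 4.0])
print(stats)  # Mean 2.0, StandardDeviation sqrt(2) ~ 1.414, Maximum 4.0, Minimum 0.0
```

To view the logged summaries, point TensorBoard at the parent directory of the two writers, for example `tensorboard --logdir /tmp`. The train and test logs then appear as separate runs.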


```python
# Written for TensorFlow 1.x: tf.placeholder, tf.layers, tf.Session and the
# tensorflow.examples.tutorials module are not available in TensorFlow 2.x.
from tensorflow.examples.tutorials.mnist import input_data
import tensorflow as tf

mnist_data = input_data.read_data_sets('MNIST_data', one_hot=True)

input_size = 784      # 28 x 28 pixels, flattened
no_classes = 10
batch_size = 100
total_batches = 200

x_input = tf.placeholder(tf.float32, shape=[None, input_size])
y_input = tf.placeholder(tf.float32, shape=[None, no_classes])
x_input_reshape = tf.reshape(x_input, [-1, 28, 28, 1], name='input_reshape')


def add_variable_summary(tf_variable, summary_name):
    """Log mean, standard deviation, max, min and a histogram of a tensor."""
    with tf.name_scope(summary_name + '_summary'):
        mean = tf.reduce_mean(tf_variable)
        tf.summary.scalar('Mean', mean)
        with tf.name_scope('standard_deviation'):
            standard_deviation = tf.sqrt(tf.reduce_mean(
                tf.square(tf_variable - mean)))
        tf.summary.scalar('StandardDeviation', standard_deviation)
        tf.summary.scalar('Maximum', tf.reduce_max(tf_variable))
        tf.summary.scalar('Minimum', tf.reduce_min(tf_variable))
        tf.summary.histogram('Histogram', tf_variable)


def convolution_layer(input_layer, filters, kernel_size=[3, 3],
                      activation=tf.nn.relu):
    layer = tf.layers.conv2d(
        inputs=input_layer,
        filters=filters,
        kernel_size=kernel_size,
        activation=activation,
    )
    add_variable_summary(layer, 'convolution')
    return layer


def pooling_layer(input_layer, pool_size=[2, 2], strides=2):
    layer = tf.layers.max_pooling2d(
        inputs=input_layer,
        pool_size=pool_size,
        strides=strides
    )
    add_variable_summary(layer, 'pooling')
    return layer


def dense_layer(input_layer, units, activation=tf.nn.relu):
    layer = tf.layers.dense(
        inputs=input_layer,
        units=units,
        activation=activation
    )
    add_variable_summary(layer, 'dense')
    return layer


# 28x28x1 -> conv 26x26x64 -> pool 13x13x64 -> conv 11x11x128 -> pool 5x5x128
convolution_layer_1 = convolution_layer(x_input_reshape, 64)
pooling_layer_1 = pooling_layer(convolution_layer_1)
convolution_layer_2 = convolution_layer(pooling_layer_1, 128)
pooling_layer_2 = pooling_layer(convolution_layer_2)
flattened_pool = tf.reshape(pooling_layer_2, [-1, 5 * 5 * 128],
                            name='flattened_pool')
dense_layer_bottleneck = dense_layer(flattened_pool, 1024)

# Dropout is only applied when dropout_bool is fed True (training)
dropout_bool = tf.placeholder(tf.bool)
dropout_layer = tf.layers.dropout(
    inputs=dense_layer_bottleneck,
    rate=0.4,
    training=dropout_bool
)
# Logits stay linear: softmax is applied inside the loss, so no ReLU here
logits = dense_layer(dropout_layer, no_classes, activation=None)

with tf.name_scope('loss'):
    softmax_cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
        labels=y_input, logits=logits)
    loss_operation = tf.reduce_mean(softmax_cross_entropy, name='loss')
    tf.summary.scalar('loss', loss_operation)

with tf.name_scope('optimiser'):
    optimiser = tf.train.AdamOptimizer().minimize(loss_operation)

with tf.name_scope('accuracy'):
    with tf.name_scope('correct_prediction'):
        predictions = tf.argmax(logits, 1)
        correct_predictions = tf.equal(predictions, tf.argmax(y_input, 1))
    with tf.name_scope('accuracy'):
        accuracy_operation = tf.reduce_mean(
            tf.cast(correct_predictions, tf.float32))
tf.summary.scalar('accuracy', accuracy_operation)

session = tf.Session()
session.run(tf.global_variables_initializer())

merged_summary_operation = tf.summary.merge_all()
train_summary_writer = tf.summary.FileWriter('/tmp/train', session.graph)
test_summary_writer = tf.summary.FileWriter('/tmp/test')

test_images, test_labels = mnist_data.test.images, mnist_data.test.labels

for batch_no in range(total_batches):
    mnist_batch = mnist_data.train.next_batch(batch_size)
    train_images, train_labels = mnist_batch[0], mnist_batch[1]
    _, merged_summary = session.run(
        [optimiser, merged_summary_operation],
        feed_dict={
            x_input: train_images,
            y_input: train_labels,
            dropout_bool: True
        })
    train_summary_writer.add_summary(merged_summary, batch_no)
    # Every 10 batches, evaluate on the full 10,000-image test set at once
    if batch_no % 10 == 0:
        merged_summary, _ = session.run(
            [merged_summary_operation, accuracy_operation],
            feed_dict={
                x_input: test_images,
                y_input: test_labels,
                dropout_bool: False
            })
        test_summary_writer.add_summary(merged_summary, batch_no)
```

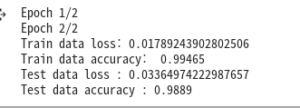
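In the TensorFlow code, `dropout_bool` controls whether dropout is applied. It is fed `True` for training batches and `False` for the test evaluation. `tf.layers.dropout` uses inverted dropout: each unit is zeroed with probability `rate`, and the surviving units are scaled by `1 / (1 - rate)`, so no rescaling is needed at test time. The following is a pure-Python sketch of that behaviour with a hypothetical function name. It is not TensorFlow's implementation.

```python
import random


def dropout(values, rate=0.4, training=True, rng=None):
    """Inverted dropout on a flat list of activations.

    training=True: zero each value with probability `rate` and scale the
    survivors by 1 / (1 - rate) so the expected sum stays the same.
    training=False: return the input unchanged (as when dropout_bool is False).
    """
    if not training:
        return list(values)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - rate)
    return [v * scale if rng.random() >= rate else 0.0 for v in values]


activations = [1.0] * 10
print(dropout(activations, training=False))                       # ten 1.0 values, unchanged
print(dropout(activations, training=True, rng=random.Random(0)))  # each entry is 0.0 or 1/0.6
```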

CNN model using Keras:

Training runs for 2 epochs (epochs = 2). The model is built in this order: two convolution layers (64 and 128 filters), 2×2 max pooling, 30% dropout, Flatten, a fully connected layer with 1024 units, 30% dropout, and a fully connected softmax layer with 10 units.
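Most of this model's weights are in the first fully connected layer. The single 2×2 max pooling step only reduces the 24×24×128 feature map to 12×12×128 before it is flattened. The arithmetic below is a pure-Python sketch with hypothetical helper names. It assumes 3×3 kernels with 'valid' padding (the Keras `Conv2D` default) and one bias per filter or unit. The totals should match the Param # column of `simple_cnn_model.summary()`.

```python
def conv_params(kernel_h, kernel_w, in_channels, filters):
    # one kernel_h x kernel_w x in_channels kernel per filter, plus one bias per filter
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(inputs, units):
    # full weight matrix plus one bias per unit
    return inputs * units + units


def simple_cnn_param_counts():
    counts = {}
    counts["conv2d_64"] = conv_params(3, 3, 1, 64)      # 28x28x1  -> 26x26x64
    counts["conv2d_128"] = conv_params(3, 3, 64, 128)   # 26x26x64 -> 24x24x128
    # MaxPooling2D 2x2: 24x24x128 -> 12x12x128; pooling, dropout, flatten have no weights
    flattened = 12 * 12 * 128
    counts["dense_1024"] = dense_params(flattened, 1024)
    counts["dense_10"] = dense_params(1024, 10)
    return counts


counts = simple_cnn_param_counts()
for name, n in counts.items():
    print(f"{name:>10}: {n:,}")
print(f"{'total':>10}: {sum(counts.values()):,}")  # total: 18,960,138
```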


```python
import tensorflow as tf

batch_size = 128
no_classes = 10
epochs = 2
image_height, image_width = 28, 28

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

# Add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train.reshape(x_train.shape[0], image_height, image_width, 1)
x_test = x_test.reshape(x_test.shape[0], image_height, image_width, 1)
input_shape = (image_height, image_width, 1)

# Scale pixel values from 0-255 to 0-1
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255.
x_test /= 255.

# One-hot encode the integer labels
y_train = tf.keras.utils.to_categorical(y_train, no_classes)
y_test = tf.keras.utils.to_categorical(y_test, no_classes)


def simple_cnn(input_shape):
    model = tf.keras.models.Sequential()
    model.add(tf.keras.layers.Conv2D(
        filters=64,
        kernel_size=(3, 3),
        activation='relu',
        input_shape=input_shape
    ))
    model.add(tf.keras.layers.Conv2D(
        filters=128,
        kernel_size=(3, 3),
        activation='relu'
    ))
    model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2)))
    model.add(tf.keras.layers.Dropout(rate=0.3))
    model.add(tf.keras.layers.Flatten())
    model.add(tf.keras.layers.Dense(units=1024, activation='relu'))
    model.add(tf.keras.layers.Dropout(rate=0.3))
    model.add(tf.keras.layers.Dense(units=no_classes, activation='softmax'))
    model.compile(loss=tf.keras.losses.categorical_crossentropy,
                  optimizer=tf.keras.optimizers.Adam(),
                  metrics=['accuracy'])
    return model


simple_cnn_model = simple_cnn(input_shape)
# Keyword arguments: positionally, fit()'s fifth parameter is verbose, not validation_data
simple_cnn_model.fit(x_train, y_train,
                     batch_size=batch_size,
                     epochs=epochs,
                     validation_data=(x_test, y_test))

train_loss, train_accuracy = simple_cnn_model.evaluate(
    x_train, y_train, verbose=0)
print('Train data loss:', train_loss)
print('Train data accuracy:', train_accuracy)

test_loss, test_accuracy = simple_cnn_model.evaluate(
    x_test, y_test, verbose=0)
print('Test data loss:', test_loss)
print('Test data accuracy:', test_accuracy)
```
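The Keras model is trained on one-hot labels from `to_categorical`, using categorical cross-entropy on the softmax output. For a one-hot target, this loss is -Σ y·log(p), which reduces to -log of the probability assigned to the true class. The sketch below is a simplified pure-Python illustration with hypothetical function names and made-up prediction values. The real Keras loss also guards against log(0) internally, so this is not its exact implementation.

```python
import math


def to_one_hot(label, num_classes=10):
    """One-hot vector for an integer label, like to_categorical does per row."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """-sum(y_true * log(y_pred)), with predictions clipped to avoid log(0)."""
    return -sum(t * math.log(min(max(p, eps), 1.0 - eps))
                for t, p in zip(y_true, y_pred))


y_true = to_one_hot(3)                      # digit "3"
y_pred = [0.02, 0.02, 0.02, 0.70, 0.04,     # softmax output (made-up values)
          0.04, 0.04, 0.04, 0.04, 0.04]
loss = categorical_crossentropy(y_true, y_pred)
print(round(loss, 4))                                   # 0.3567, i.e. -log(0.7)
print(y_pred.index(max(y_pred)) == y_true.index(1.0))   # True: counts toward accuracy
```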

CIFAR-10 classification using Keras:

The model uses a basic CNN structure: two convolution layers (32 and 64 filters), 2×2 max pooling, Flatten, and then two fully connected (FC) layers with 1024 and 10 units.
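In this model, `MaxPooling2D(pool_size=(2, 2))` halves the 28×28×64 output of the second convolution to 14×14×64. The 3×3 'valid' convolutions take the spatial size from 32 to 30 to 28. Pooling keeps the largest value in each non-overlapping 2×2 window, separately for every channel. Below is a single-channel pure-Python sketch with a hypothetical function name. It assumes stride 2 and 'valid' padding, which Keras uses by default when only `pool_size` is given.

```python
def max_pool_2x2(image):
    """2x2 max pooling, stride 2, 'valid' padding, on one channel (a 2-D list).
    A trailing odd row or column is dropped, as with 'valid' padding."""
    rows, cols = len(image), len(image[0])
    return [
        [max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
         for c in range(0, cols - 1, 2)]
        for r in range(0, rows - 1, 2)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]
print(max_pool_2x2(feature_map))  # [[4, 5], [3, 7]]
```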


```python
import tensorflow as tf

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()

row = 32
col = 32
epochs = 2
num_classes = 10

# Scale pixel values from 0-255 to 0-1 (train and test sets separately)
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255.
x_test /= 255.

# CIFAR-10 labels have shape (N, 1); to_categorical turns them into (N, 10)
y_train = tf.keras.utils.to_categorical(y_train, num_classes)
y_test = tf.keras.utils.to_categorical(y_test, num_classes)

input_shape = (row, col, 3)   # 32x32 RGB images


def simple_cnn(input_shape):
    model = tf.keras.models.Sequential()
    model.add(tf.keras.layers.Conv2D(
        filters=32,
        kernel_size=(3, 3),
        activation='relu',
        input_shape=input_shape
    ))
    model.add(tf.keras.layers.Conv2D(
        filters=64,
        kernel_size=(3, 3),
        activation='relu'
    ))
    model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2)))
    model.add(tf.keras.layers.Flatten())
    model.add(tf.keras.layers.Dense(units=1024, activation='relu'))
    model.add(tf.keras.layers.Dense(units=num_classes, activation='softmax'))
    model.compile(loss=tf.keras.losses.categorical_crossentropy,
                  optimizer=tf.keras.optimizers.Adam(),
                  metrics=['accuracy'])
    return model


simple_cnn_model = simple_cnn(input_shape=input_shape)
# Keyword arguments: positionally, fit()'s fifth parameter is verbose, not validation_data
simple_cnn_model.fit(x_train, y_train,
                     batch_size=128,
                     epochs=epochs,
                     validation_data=(x_test, y_test))

train_loss, train_accuracy = simple_cnn_model.evaluate(
    x_train, y_train, verbose=0)
print('Train data loss:', train_loss)
print('Train data accuracy:', train_accuracy)

test_loss, test_accuracy = simple_cnn_model.evaluate(
    x_test, y_test, verbose=0)
print('Test data loss:', test_loss)
print('Test data accuracy:', test_accuracy)
```
